Quad9 Threat Blocking

TL;DR: Buggy Python code produced bogus result summaries in tests of our Quad9 service, and an incomplete methodology makes even the corrected results questionable. The updated result looks much better!

Yesterday, there was a posting by an Australian cryptography site that compared several DNS blocklist providers against each other. The title was “How to Pick The Best Threat-Blocking DNS Provider.”

Ten DNS providers, including Quad9, were compared against a public-domain blocklist. We ended up with a result of zero: no blocks of any kind. This was both surprising and clearly false (we provide millions of blocks for our users every day), so we took a closer look.

We found a few problems with this test. First, the results for Quad9 are entirely incorrect. The good news is that the author made his code open source, which allowed us to examine where it went wrong. Flaws in the code caused Quad9’s block response (NXDOMAIN) to be ignored entirely, giving us a zero result. We will be submitting the code changes back to the DiNgoeS project, though fixing the code is not sufficient to make the test statistically rigorous enough for its results to be used for decisions (see the second point below).
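To illustrate the kind of fault described above, here is a minimal Python sketch of a response classifier that counts NXDOMAIN as a block. It is not the DiNgoeS code or our patch; the `DnsResult` type, the `is_blocked` and `count_blocks` helpers, and the sinkhole addresses are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical sinkhole addresses; real providers publish their own values.
SINKHOLE_ADDRESSES = frozenset({"0.0.0.0", "127.0.0.1"})


@dataclass
class DnsResult:
    """Simplified view of one DNS lookup (illustrative, not a library type)."""
    rcode: str  # e.g. "NOERROR", "NXDOMAIN", "SERVFAIL"
    addresses: list[str] = field(default_factory=list)


def is_blocked(result: DnsResult) -> bool:
    """Return True if the response looks like a block."""
    # Some providers, Quad9 among them, signal a block with NXDOMAIN.
    # A test that only looks for sinkhole addresses misses these entirely.
    if result.rcode == "NXDOMAIN":
        return True
    # Other providers answer with a sinkhole address instead.
    if result.rcode == "NOERROR" and result.addresses:
        return all(addr in SINKHOLE_ADDRESSES for addr in result.addresses)
    # Errors such as SERVFAIL or timeouts are not evidence of a block.
    return False


def count_blocks(results: list[DnsResult]) -> int:
    """Count how many lookups in a test run were blocked."""
    return sum(is_blocked(r) for r in results)
```

A classifier that skipped the NXDOMAIN branch would report zero blocks for any provider that blocks this way. Note that this sketch does not try to tell a blocking NXDOMAIN apart from a domain that genuinely no longer exists, which a rigorous test would also need to handle.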

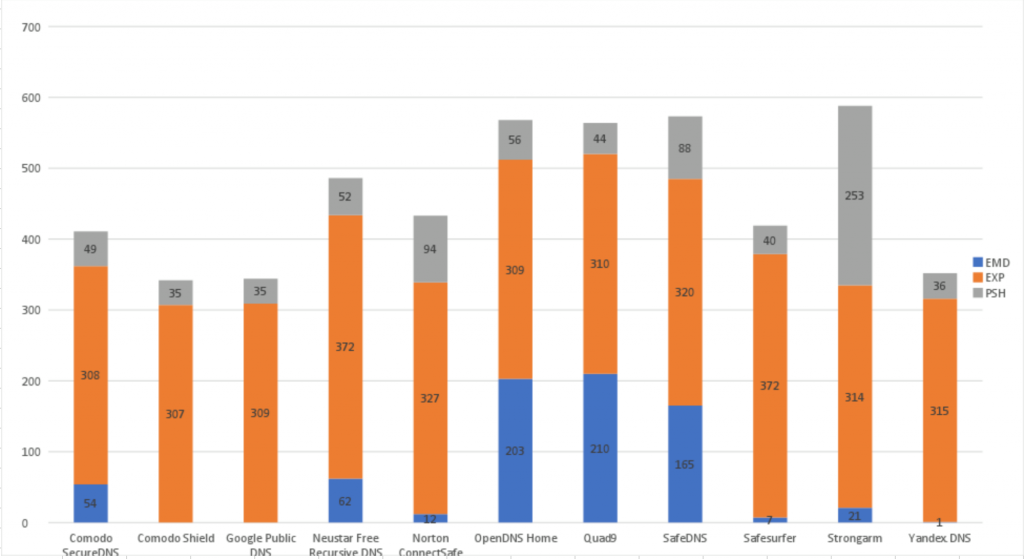

After patching the code to record responses correctly, Quad9 came in with vastly different results, putting us in 4th “place” overall for the number of blocks in all three categories:

In the Malware (EMD) feed, Quad9 got 210 out of 500 (42.0%)

In the Phishing (PSH) feed, Quad9 got 44 out of 500 (8.8%)

In the Exploits (EXP) feed, Quad9 got 310 out of 500 (62.0%)
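The percentages above follow directly from the block counts and the 500-entry sample per feed. A short Python sketch of that arithmetic, where the `block_rate` helper and the dictionary layout are illustrative assumptions rather than part of the test tool:

```python
SAMPLE_SIZE = 500  # entries tested per feed, as reported above

# Block counts from our patched run of the test.
blocked = {
    "Malware (EMD)": 210,
    "Phishing (PSH)": 44,
    "Exploits (EXP)": 310,
}


def block_rate(blocked_count: int, sample_size: int = SAMPLE_SIZE) -> float:
    """Return the share of tested entries that were blocked, in percent."""
    return 100.0 * blocked_count / sample_size


for feed, count in blocked.items():
    print(f"{feed}: {count}/{SAMPLE_SIZE} = {block_rate(count):.1f}%")
```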

Note that we have not added anything specifically from this test list to our blocklist between when the article was first published and when we ran our tests, other than what “naturally” has moved into the blocklist due to normal threat mitigation analysis. We’re not cherry picking.

This puts Quad9 at #4 by this test’s rankings, but the comparison between places 1 through 4 is very tight: only a 4% difference between #1 and #4. Yandex also moved from having only 10 results to having 351 in our runs of the test, which might indicate another serious methodology flaw that we did not investigate further. These statistical quirks bring us to the more concerning point, which is:

This test is biased toward public data that may or may not be correct as a baseline. There are probably items in this list that are not actually malware, phishing, or exploit sites and are incorrectly included. Such an entry is called a “false positive,” and keeping false positives low is of critical importance to anyone running a blocking system of any sort. Quad9 maintains a very low tolerance for false positives since we know that people are likely to leave the system if they can’t resolve a legitimate site. Each DNS blocking system has its own tolerance for false positives; this is not unique to Quad9.

The fact that a site does not meet our blocklist criteria and yet is on this public list does not necessarily indicate a “missed” threat; it may be that the site was evaluated and determined not to be a threat. Indiscriminate blocking of sites with low threat signals may do more harm than good, and it’s not clear that using this list (or any list, really) will easily create a meaningful comparison when blocklist providers are within some reasonable standard deviation of each other. Comparing the effectiveness of blocking strategies is incredibly difficult. While this method might show broad protection capabilities, we would not use such a simplistic set of testing criteria to compare the “success” of providers against each other. In short: if we’re all being compared against a standard, then the standard needs to be understood much better, and the code needs to be reviewed more rigorously before public results are published.

One of the important things that sets Quad9 apart from other sources of blocklist data is that we do not have a single-source model for our threat intelligence stream. We have 19 different partners or sources of blocklist data, and from those, we compile a validated and high-confidence list of sites to include in our blocking feed. IBM X-Force is one of those providers that was called out in the article as a contributor to Quad9, but there are many others in the mix who provide significant value to the system. If a threat is missed by one provider, it may be picked up by another. Some providers specialize in phishing, some in malware, some in command-and-control systems. We evaluate and include the data we think is most useful to protect the end user, and we evaluate the risks of false positives to minimize user interruption.

We have informed the author of the code faults, and hope that at a minimum there is an update to reflect the true values. We welcome the effort to quantify the usefulness of DNS-based blocking services, but we’d hope that there is a more rigorous approach to this comparison in future iterations.

You can see a summary of our results: